Trusting ChatGPT with Your Confidential Data…A Safe Bet?


A Bad Bet!

Let’s get the correct answer out of the way right off the bat: if you’re using ChatGPT as-is, out of the box, no strings attached, you should never put any confidential information into it. Why, you ask? What a great question!

You see, ChatGPT is built on what’s called an LLM (Large Language Model… check out my other post on LLMs for more info there). An LLM is built from data…and not just a little bit of data. We’re talking billions of words of text. ChatGPT obtained its data from publications that have been posted over the years (see New York Times v. OpenAI): it took those publications, broke them into small pieces (called tokens) that the LLM can process, and then spent years “training” the model. That means teaching the model what certain things mean by asking it questions and then telling it whether or not it got the answer right. This is what is meant by “training” when you’re talking about LLMs.

Training the trainer.

Now, let’s fast-forward to that really confidential piece of information that you want ChatGPT to analyze and respond to. ChatGPT, as-is, out of the box, is set up to use every single question you ask to help train its model, which means your input can end up in the LLM itself! In essence, whatever you put into that LLM could, in theory, become accessible to someone else. But there are ways to protect yourself!

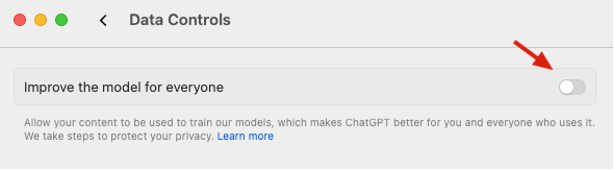

First, ChatGPT has a setting under your profile → Settings → Data Controls that determines whether your queries are used to train its model. Turn that training off, first and foremost.

All done, right? Easy peasy? Not quite. Notice how all the queries you put into ChatGPT are still there when you log out and back in? That’s right, ChatGPT saves those too. ChatGPT used to let you “Turn Off Chat History,” but that option has been removed. You now need to either delete your queries manually or use Temporary Chat (which still stores your queries but auto-deletes them after ~30 days). Since there’s no way to prevent ChatGPT from saving history, there’s no surefire way to put confidential info into ChatGPT without risking a leak. Taking the two steps above, however, reduces the risk significantly.

Now that we’ve covered your own personal use of ChatGPT, let’s touch on SaaS.

What to look for when buying SaaS Generative AI (GAI) solutions.

If a vendor outright claims that they offer GAI solutions and that it’s safe to put personally identifiable information (PII) or other confidential information into their platform… definitely do your homework! As you just learned above, there’s currently no surefire way to put confidential info into ChatGPT without risking a leak. In the current market, I am seeing vendors who claim that you can query confidential information securely, only to find out that they have merely switched off training in Data Controls and spin it as “having a contract in place.” I don’t know about you, but for me personally, that’s simply not good enough.

To any SaaS vendor claiming you can query confidential information safely, ask them the following 3 questions:

- In the event my confidential information is leaked through your product, do you own the damages?

- Tell me, how do you keep my confidential information safe?

- If I log out of your application and back in, are my prior queries still there?

If the vendor you are vetting claims that they cover damages for any confidential leaks caused by their product, I’d be wary, and at the very least I’d get that in writing before making any decisions. What this question does do is open the door for them to explain, from a technical perspective (assuming they have one), how their GAI solution keeps your confidential information safe. The best safety net is a solution that prevents the confidential information from ever being sent in the first place.
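What might “preventing confidential information from ever being sent” look like in practice? Below is a minimal sketch in Python (3.9+), assuming a simple pre-submission filter that scrubs a prompt before it reaches any GAI service. The function `redact`, the `PATTERNS` table, and the example term “Project Falcon” are hypothetical illustrations, not any vendor’s actual product, and a handful of regular expressions is nowhere near a complete PII detector.

```python
import re
from typing import Iterable

# Illustrative patterns only (assumption): real PII detection needs far more
# than a few regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(prompt: str, confidential_terms: Iterable[str] = ()) -> tuple[str, list[str]]:
    """Replace anything that looks confidential with a placeholder.

    Returns the redacted prompt plus the labels of everything found, so the
    caller can decide whether the prompt should be sent at all.
    """
    found: list[str] = []
    redacted = prompt
    # Pattern-based scrubbing (emails, SSN-like and phone-like numbers).
    for label, pattern in PATTERNS.items():
        redacted, count = pattern.subn(f"[{label}]", redacted)
        found.extend([label] * count)
    # Hypothetical organization-specific blocklist (client names, code names, ...).
    for term in confidential_terms:
        term_pattern = re.compile(re.escape(term), re.IGNORECASE)
        redacted, count = term_pattern.subn("[CONFIDENTIAL]", redacted)
        found.extend(["CONFIDENTIAL"] * count)
    return redacted, found


if __name__ == "__main__":
    prompt = "Summarize the Project Falcon memo and email jane.doe@example.com, SSN 123-45-6789."
    clean, found = redact(prompt, confidential_terms=["Project Falcon"])
    if found:
        # Block (or require review) instead of sending anything sensitive.
        print("Blocked, found:", found)
    print(clean)
```

Returning the list of findings, rather than quietly sending the scrubbed text, lets the caller refuse to submit a prompt at all when anything sensitive turns up. That is much closer to the “never sent” ideal than a contract that only kicks in after a leak.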

Rubber meets road.

In the end, when the rubber meets the road, the only true option to date for keeping your confidential information safe is to have safeguards in place before you submit the query. Your only other option for safely querying confidential information is a closed LLM, meaning an LLM built on your own data…and not tied to an open LLM like ChatGPT. A closed LLM is like a private sandbox where you control who’s invited to play, while ChatGPT’s open LLM is like a public park…anybody can come in, bring whatever they have, and take whatever they want. Keep your data safe: once confidentiality is gone, it’s gone.